- Using GPUs with Docker on Kubernetes: how to

- Using GPUs with Docker on Kubernetes: code

- Using GPUs with Docker on Kubernetes: install

# Using GPUs with Docker on Kubernetes: how to

And when I use this docker-compose file:

`services:`

and exec `nvidia-smi` after running it, I get the same response. But when I replace the image in the docker-compose file with `python:3.8.8-slim-buster`, as in the Dockerfile, I get this response: `OCI runtime exec failed: exec failed: container_linux.`

I should note that, due to the 'alpha' state of GPU support in Kubernetes, the following run-through on how to connect OpenShift, running on RHEL, with an NVIDIA adapter inside an EC2 instance is currently unsupported.
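
For comparison with `docker run --gpus all`, Compose can reserve GPUs for a service through `deploy.resources.reservations.devices`. The Python sketch below generates such a compose file as JSON (which is valid YAML, so Compose should read it), assuming Docker Compose v2 and the NVIDIA Container Toolkit on the host. The service name `app` and the `sleep infinity` command are hypothetical placeholders, not the compose file from the question, which is not shown in full.

```python
import json
from typing import Union


def gpu_service(image: str, gpu_count: Union[int, str] = "all") -> dict:
    """Build a Compose service definition that reserves NVIDIA GPUs.

    `gpu_count` may be an int or the string "all", mirroring `--gpus all`.
    """
    return {
        "image": image,
        # Hypothetical placeholder command that keeps the container alive,
        # so that `nvidia-smi` can be exec'd into it afterwards.
        "command": ["sleep", "infinity"],
        "deploy": {
            "resources": {
                "reservations": {
                    "devices": [
                        {
                            "driver": "nvidia",
                            "count": gpu_count,
                            "capabilities": ["gpu"],
                        }
                    ]
                }
            }
        },
    }


def compose_file(image: str) -> str:
    # "app" is a hypothetical service name.
    return json.dumps({"services": {"app": gpu_service(image)}}, indent=2)


if __name__ == "__main__":
    print(compose_file("python:3.8.8-slim-buster"))
```

Saved as, say, `compose.json` (a hypothetical file name), it could be started with `docker compose -f compose.json up -d` and checked with `docker compose -f compose.json exec app nvidia-smi`.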

# Using GPUs with Docker on Kubernetes: code

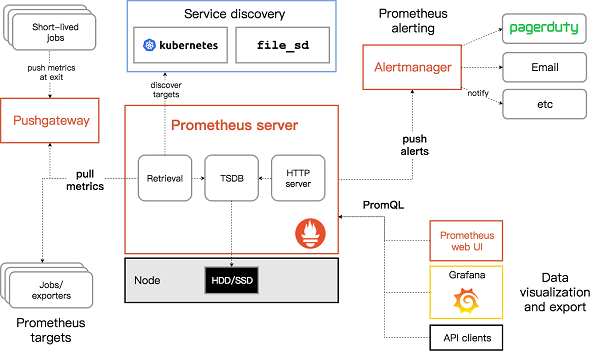

As Shingo Omura writes, Kubernetes is today the most popular open-source system for automating the deployment, scaling, and management of containerized applications. Along with this scheduling and deployment, you can use other open-source tooling in the Kubernetes ecosystem, such as Pachyderm, to make sure the right data reaches the right TensorFlow code on the right type of nodes (see "Using Kubernetes and Pachyderm to schedule tasks on CPUs or GPUs").

Building images inside the cluster with Docker has drawbacks. The Docker build containers run in privileged mode, which is a big security concern and something of an open door to malicious attacks. Mounting the host's `docker.sock` will also stop working in the future unless Docker is installed on every Kubernetes node. These issues can be resolved using Kaniko.

I want to run a container based on `python:3.8.8-slim-buster` that needs access to the GPU. When I build it from this Dockerfile:

`FROM python:3.8.8-slim-buster`

and then run it with the `--gpus all` flag and exec `nvidia-smi`, I get a proper response (timestamped Sat Jun 19 12:26:57 2021):

| Fan | Temp | Perf | Pwr:Usage/Cap | Memory-Usage | GPU-Util | Compute M. |
|-----|------|------|---------------|--------------|----------|------------|
| N/A | 45C | P8 | N/A / N/A | 301MiB / 1878MiB | 14% | Default |

With the knowledge of what Docker needs to be able to run a GPU-enabled container, it is straightforward to add this to Kubernetes. The first step is to enable an experimental feature.

To install the NVIDIA device plugin, first create a namespace with the `kubectl create namespace` command, for example `gpu-resources`:

`kubectl create namespace gpu-resources`

Then create a file named `nvidia-device-plugin-ds.yaml` and paste in the YAML manifest provided as part of the NVIDIA device plugin for Kubernetes project.
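
After the device plugin DaemonSet is deployed, each GPU node should report an `nvidia.com/gpu` entry under `status.allocatable`. Below is a minimal Python sketch that reads the JSON printed by `kubectl get nodes -o json` and reports the allocatable GPU count per node. The helper name is hypothetical. A node showing 0 here would explain why a GPU pod cannot be scheduled on it.

```python
import json

# Extended resource name advertised by the NVIDIA device plugin.
GPU_RESOURCE = "nvidia.com/gpu"


def allocatable_gpus(nodes_json: str) -> dict:
    """Map node name -> allocatable GPU count from `kubectl get nodes -o json`."""
    data = json.loads(nodes_json)
    result = {}
    for node in data.get("items", []):
        name = node["metadata"]["name"]
        allocatable = node.get("status", {}).get("allocatable", {})
        # Quantities are strings in the API (e.g. "1"). Nodes without the
        # plugin simply lack the key, which is reported as 0.
        result[name] = int(allocatable.get(GPU_RESOURCE, "0"))
    return result
```

For example: `allocatable_gpus(subprocess.run(["kubectl", "get", "nodes", "-o", "json"], capture_output=True, text=True, check=True).stdout)`.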

I pulled the GPU image `gcr.io/tensorflow/tensorflow:0.11-gpu` and ran the `mnist.py` demo with Docker (`docker run -it $ /bin/bash`). Then I found another way: `nvidia-docker`. But Kubernetes runs containers with `docker`, not `nvidia-docker`, so how can I do the same thing there? Can you help me? I want to know how to actually run GPU workloads on Kubernetes, not just a demo or a test YAML that proves Kubernetes supports GPUs. A new question also came up: should I use `docker` to run on the CPU and `nvidia-docker` on the GPU? I can only run on the GPU with Docker (or perhaps `nvidia-docker`), so how do I run on the GPU with Kubernetes?

Kubernetes on NVIDIA GPUs includes support for GPUs and enhancements to Kubernetes so that users can easily configure and use GPU resources for accelerating workloads such as deep learning.
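
On Docker 19.03 and later with the NVIDIA Container Toolkit installed, the separate `nvidia-docker` wrapper is not needed. The ordinary `docker` CLI runs both CPU and GPU containers, and GPU access is requested with the `--gpus` flag. The hypothetical helper below builds the command line for either case as a Python argument list, using the image from the question as an example.

```python
import shlex
from typing import List, Optional


def docker_run_argv(image: str, use_gpu: bool,
                    command: Optional[List[str]] = None) -> List[str]:
    """Return argv for `docker run`, adding `--gpus all` for GPU workloads."""
    argv = ["docker", "run", "-it"]
    if use_gpu:
        # Requires Docker >= 19.03 and the NVIDIA Container Toolkit on the host.
        argv += ["--gpus", "all"]
    argv.append(image)
    # Default to an interactive shell, as in the question.
    argv += command or ["/bin/bash"]
    return argv


if __name__ == "__main__":
    print(shlex.join(docker_run_argv("gcr.io/tensorflow/tensorflow:0.11-gpu", use_gpu=True)))
```

On Kubernetes you would not pick a different CLI at all. GPU access is requested through a resource limit in the pod spec and provided by the device plugin together with the node's container runtime.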

Because every node has only one GPU card, I think it works. But the question remains: how do I actually run on the GPU? This example only proves that Kubernetes can detect the GPU, not how to run on it. How can I use a YAML file to create a pod that runs on a GPU resource? When I tried, `kubectl describe` reported that no node could satisfy the request, even though every node has a GPU card (only the master doesn't). I tested many of the examples you suggested, but they still failed.

The docker-compose problem above comes from a post titled "Problem with using GPU with Docker-compose" (19 June 2021; tags: docker, docker-compose, nvidia-docker). For installation, see section 3.1, "Installing Kubernetes", of NVIDIA's *Kubernetes on NVIDIA GPUs* guide (DU-09016-001 v1.0).
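
With the NVIDIA device plugin installed (Kubernetes 1.10 or later), a pod asks for a GPU through the extended resource `nvidia.com/gpu` in its container limits. The scheduler then only places the pod on a node that advertises a free GPU, which is why a pod is reported as unschedulable when no node exposes that resource. Older alpha GPU support used a different resource name (`alpha.kubernetes.io/nvidia-gpu`), so this sketch assumes a current cluster. It builds the manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts. The pod name, image, and command are hypothetical.

```python
import json


def gpu_pod(name: str, image: str, command: list, gpus: int = 1) -> dict:
    """Pod manifest that requests `gpus` NVIDIA GPUs via the device plugin."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "command": command,
                    # Extended resources go in limits. Kubernetes uses the
                    # limit as the request, and GPUs are not overcommitted.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical smoke test: run nvidia-smi once on a GPU node.
    pod = gpu_pod("gpu-smoke-test", "python:3.8.8-slim-buster", ["nvidia-smi"])
    print(json.dumps(pod, indent=2))
```

Piping the output to `kubectl apply -f -` would create the pod. `kubectl describe pod gpu-smoke-test` then shows whether it was scheduled.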

# Using GPUs with Docker on Kubernetes: install

When I use the CPU it works, but it doesn't work on the GPU, and I want to know why. Our platform is based on TensorFlow and Kubernetes and is used for ML training.

The next function that the pipeline needs is a Task that builds a Docker image and pushes it to a container registry. The Tekton Catalog provides a kaniko Task that does this using Google's kaniko tool.

If you are setting up a single-node GPU cluster for development purposes, or you want to run jobs on the master nodes as well, then you must install the NVIDIA Container Runtime for Docker.
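
The NVIDIA device plugin's setup instructions for Docker-based nodes have asked for `nvidia` to be Docker's default runtime, so that containers the kubelet starts through Docker can reach the GPU. Below is a minimal Python sketch that merges this setting into the contents of `/etc/docker/daemon.json` while keeping existing keys. It assumes the NVIDIA Container Runtime is installed at `/usr/bin/nvidia-container-runtime` (adjust the path if not). Docker must be restarted afterwards.

```python
import json


def enable_nvidia_default_runtime(
        daemon_json: str,
        runtime_path: str = "/usr/bin/nvidia-container-runtime") -> str:
    """Return daemon.json content with `nvidia` registered and set as default.

    Existing keys (log driver, registry mirrors, ...) are preserved.
    An empty or whitespace-only input is treated as an empty config.
    """
    config = json.loads(daemon_json) if daemon_json.strip() else {}
    runtimes = config.setdefault("runtimes", {})
    runtimes["nvidia"] = {"path": runtime_path, "runtimeArgs": []}
    config["default-runtime"] = "nvidia"
    return json.dumps(config, indent=4)
```

The function only transforms strings. Reading and writing the actual file (as root) is left to the caller.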

My environment: Kubernetes v1.3.10, CUDA 7.5, NVIDIA kernel module (driver) version 367.44, TensorFlow 0.11, and a 1080 GPU. I have now spent almost a week on this problem.

Create a Task to build an image and push it to a container registry.
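
A Tekton Task that builds and pushes an image with kaniko could look roughly like the manifest generated below. This is a simplified sketch, not the Tekton Catalog's kaniko Task. It assumes the `tekton.dev/v1beta1` API, a workspace named `source` that holds the build context, and registry credentials already available to the executor. The task name and parameter names are hypothetical. kaniko needs neither privileged mode nor the host's Docker socket.

```python
import json


def kaniko_task(name: str = "build-and-push") -> dict:
    """Tekton Task manifest (hypothetical) that runs the kaniko executor."""
    return {
        "apiVersion": "tekton.dev/v1beta1",
        "kind": "Task",
        "metadata": {"name": name},
        "spec": {
            "params": [
                {"name": "IMAGE", "type": "string",
                 "description": "Registry path to push to"},
                {"name": "DOCKERFILE", "type": "string", "default": "./Dockerfile"},
            ],
            "workspaces": [{"name": "source"}],
            "steps": [
                {
                    "name": "build-and-push",
                    # Pin a specific executor version in real pipelines.
                    "image": "gcr.io/kaniko-project/executor:latest",
                    "workingDir": "$(workspaces.source.path)",
                    # Tekton substitutes $(params.*) and $(workspaces.*) at run time.
                    "args": [
                        "--dockerfile=$(params.DOCKERFILE)",
                        "--context=$(workspaces.source.path)",
                        "--destination=$(params.IMAGE)",
                    ],
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(kaniko_task(), indent=2))
```

The printed JSON can be applied with `kubectl apply -f -` and then referenced from a Pipeline or TaskRun.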
